JRiver vs JPLAY Test Results

Recommended reading first: my FLAC vs WAV post. I am not going to reiterate the baseline components and measurements of my test gear that are already covered there.

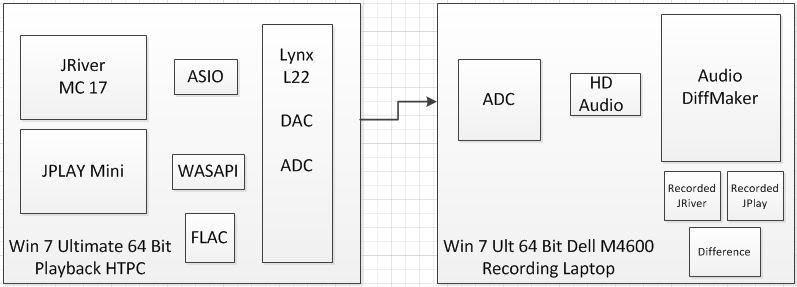

Here is a high level block diagram of my test setup:

On the left side is my HTPC with both JRiver MC 17 and JPLAY mini installed. The test file is the same 24/96 FLAC of Tom Petty and the Heartbreakers' "Refugee" that I have been using for my FLAC vs WAV tests.

JRiver is set up for bit-perfect playback with no DSP, resampling, or anything else in the chain, as in my previous tests:

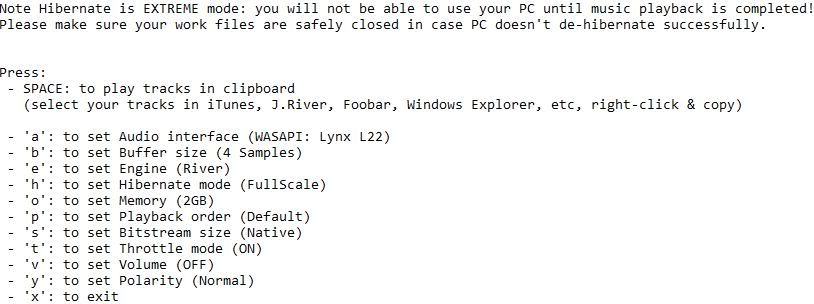

JPLAY mini is set up in Hibernate mode with the following parameters:

On the right-hand side of the diagram, I am using Audio DiffMaker to record the analog waveforms from the Lynx L22 analog outputs of my playback HTPC. All tests are run at 24/96.

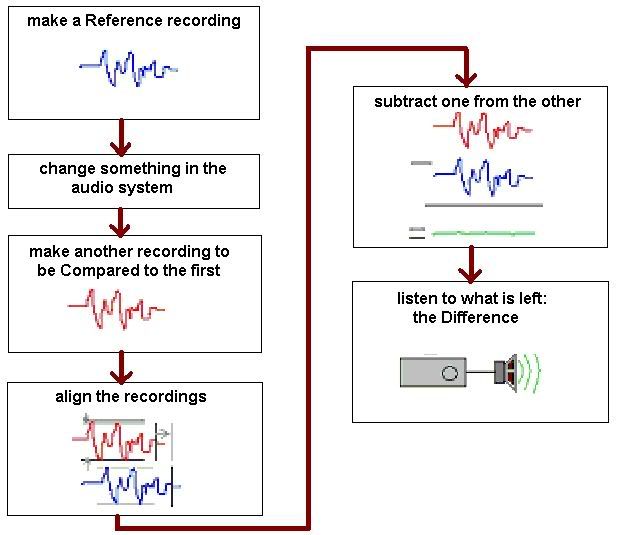

Here is the differencing process used by Audio DiffMaker:

Audio DiffMaker comes with an excellent help file that is worth taking the time to read if you want repeatable results. One tip is to ensure both recordings are within a second of each other.
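The core idea behind a differencing (null) test is easy to sketch. Below is a minimal Python illustration, assuming two mono recordings held as NumPy arrays at the same sample rate. It estimates the time offset by cross-correlation, finds the least-squares gain match, subtracts, and reports the residual level in dB relative to the reference. This is not DiffMaker's actual algorithm (DiffMaker also does finer alignment and optional sample rate drift compensation), and the name `null_test` is hypothetical.

```python
import numpy as np


def _rms(x: np.ndarray) -> float:
    """Root-mean-square level of a signal."""
    return float(np.sqrt(np.mean(x ** 2)))


def null_test(reference: np.ndarray, compared: np.ndarray) -> tuple[np.ndarray, float]:
    """Align, gain-match and subtract two mono recordings (hypothetical helper).

    Returns the difference signal and its RMS level in dB relative to the
    reference. Integer-sample alignment only: no sub-sample alignment and
    no sample rate drift compensation.
    """
    # Time offset from the largest peak of the full cross-correlation
    # (abs() so a polarity-inverted recording still lines up).
    corr = np.correlate(compared, reference, mode="full")
    lag = int(np.argmax(np.abs(corr))) - (len(reference) - 1)
    # Positive lag: `compared` starts late, so drop its first `lag` samples.
    # Negative lag: it starts early, so pad it with leading zeros.
    if lag > 0:
        compared = compared[lag:]
    elif lag < 0:
        compared = np.concatenate([np.zeros(-lag), compared])
    n = min(len(reference), len(compared))
    ref, cmp_ = reference[:n], compared[:n]
    # Least-squares gain that best matches `compared` to `reference`.
    gain = np.dot(ref, cmp_) / np.dot(cmp_, cmp_)
    diff = ref - gain * cmp_
    level_db = 20 * np.log10(_rms(diff) / _rms(ref))
    return diff, float(level_db)
```

For a 40-second 24/96 capture (about 3.8 million samples), `np.correlate` is far too slow; an FFT-based correlation such as `scipy.signal.correlate(..., method="fft")` would be the practical choice.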

As an aside, this software can be used to objectively evaluate any change to your audio playback chain, whether that is an SSD, a power supply, a DAC, interconnects, or, of course, a music player.

My assertion is that if you hear a difference when you change something in your audio system (in ABX testing), the audio waveform must have changed, and if it has changed, it can be objectively measured. I find there is a direct correlation between what I hear and what I measure, and vice versa. I want a balanced view between subjective and objective test results.
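ABX scores only mean something when compared against chance. Here is a minimal sketch, assuming independent trials where a listener who cannot hear a difference has a 50% chance of picking X correctly on each one. It computes the one-sided binomial p-value, i.e. the probability of scoring at least that well by pure guessing. The function name `abx_p_value` and the 16-trial example are illustrative, not from my tests.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Probability of getting at least `correct` right out of `trials`
    by guessing alone (p = 0.5 per trial), i.e. a one-sided binomial test."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must be between 0 and trials")
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials
```

For example, 12 correct out of 16 gives 2517/65536, roughly 0.038, so guessing would produce a score that good less than 4% of the time.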

First, I used JRiver as the reference and recorded about 40 seconds of TP’s "Refugee" onto my laptop using DiffMaker. Then I switched to JPLAY mini, in Hibernate mode, and recorded 40 seconds again onto the laptop. I did this without touching anything on either the playback machine or the recording laptop aside from launching each music player separately.

To be clear about what is going on: the music players load the FLAC file from my hard drive, the Lynx L22 performs the digital-to-analog conversion, and the signal then passes through the analog line output stage. The Lynx L22's balanced outputs feed the unbalanced inputs on my Dell, where the signal goes through the ADC and is recorded by Audio DiffMaker.

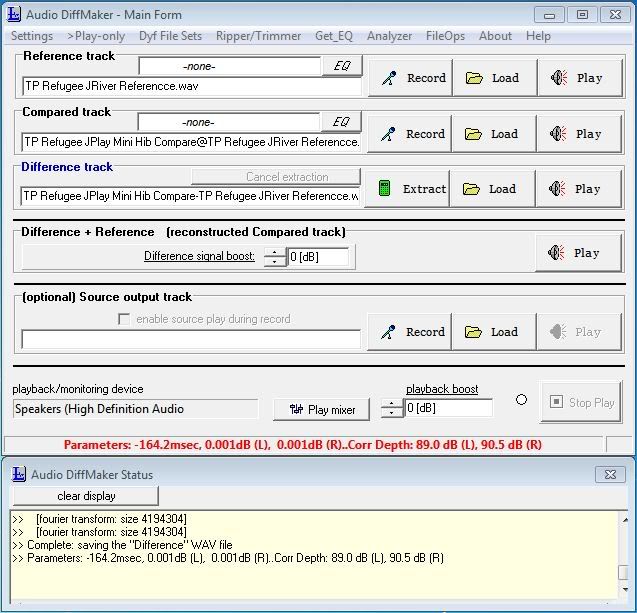

Clicking on Extract in Audio DiffMaker to get the Difference produces this result:

As you can see, the result is similar to my FLAC vs WAV comparison: the Difference signal between the two music players is at -90 dB. I repeated this process several times and obtained the same results.

You can listen to the Difference file yourself as it is attached to this post. PLEASE BE CAREFUL as you will need to turn up the volume (likely to max) to hear anything. I suggest first playing at a low level to ensure there are no loud artifacts while playing back and then increasing the volume.

As you can hear for yourself, there is a faint trace of the music that nulls out completely halfway through the track and then slowly drifts back to being barely audible at the end.

According to the DiffMaker documentation, this is called sample rate drift, and there is a checkbox in the settings to compensate for it.

“Any test in which the signal rate (such as clock speed for a digital source, or tape speed or turntable speed for an analog source) is not constant can result in a large and audible residual level in the Difference track. This is usually heard as a weak version of the Reference track that is present over only a portion of the Difference track, normally dropping into silence midway through the track, then becoming perceptible again toward the end. When severe, it can sound like a "flanging" effect in the high frequencies over the length of the track. For this reason, it is best to allow DiffMaker to compensate for sample rate drift. The default setting is to allow this compensation, with an accuracy level of "4".”

Of course, this makes sense: I recorded on a different computer than the playback machine, and the two sample rate clocks were not locked together. The DiffMaker documentation recommends locking them, but I have no way of synching the sample rate clock on the Dell with my Lynx card.
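To see why unlocked clocks produce exactly the symptom the DiffMaker help file describes, here is a small simulation. It is a sketch under stated assumptions: a pure 1 kHz tone stands in for the music, the two clocks differ by a hypothetical 10 ppm (not a measured figure for my Dell or Lynx), and the two tracks are assumed to be perfectly aligned at their midpoint. The function name `drift_residual_profile` is hypothetical.

```python
import numpy as np


def drift_residual_profile(fs: int = 96_000, seconds: float = 40.0,
                           tone_hz: float = 1_000.0, drift_ppm: float = 10.0,
                           segments: int = 8) -> list[float]:
    """Residual level (dB re. the reference) in each of `segments` equal
    slices of a null test between two recordings whose clocks differ by
    `drift_ppm` (hypothetical helper for illustration)."""
    t = np.arange(int(fs * seconds)) / fs
    mid = seconds / 2
    reference = np.sin(2 * np.pi * tone_hz * t)
    # Model the clock mismatch as a linear time-scale error around the
    # midpoint, where the two tracks are assumed to line up exactly.
    stretched = mid + (t - mid) * (1 + drift_ppm * 1e-6)
    compared = np.sin(2 * np.pi * tone_hz * stretched)
    diff = reference - compared
    levels = []
    for ref_seg, diff_seg in zip(np.array_split(reference, segments),
                                 np.array_split(diff, segments)):
        ratio = np.sqrt(np.mean(diff_seg ** 2) / np.mean(ref_seg ** 2))
        levels.append(float(20 * np.log10(ratio)))
    return levels
```

Printing the returned levels shows the residual is lowest in the two middle slices and loudest at both ends, the same "drops into silence midway, then comes back" pattern I hear in the Difference file. Correcting the time scale by the drift factor would remove it, which is the job of DiffMaker's compensation setting.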

Given that the Difference signal is -90 dB relative to the reference and the noise floor of my Dell sound card is -86 dB, we are at the limits of my test gear. A -90 dB signal is inaudible compared to the reference signal level.
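The decibel figures are easier to grasp as ratios. A quick worked conversion, using the standard amplitude relation ratio = 10^(dB/20) and the two levels quoted above, and treating both as being on the same scale, as the paragraph above does:

```python
def db_to_amplitude_ratio(level_db: float) -> float:
    """Convert a level in dB (re. the reference) to an amplitude ratio."""
    return 10 ** (level_db / 20)


difference_db = -90.0   # Difference track level from DiffMaker
noise_floor_db = -86.0  # noise level of the Dell sound card

print(f"Difference amplitude: {db_to_amplitude_ratio(difference_db):.2e} of the reference")
print(f"Difference sits {noise_floor_db - difference_db:.0f} dB below the recorder's noise floor")
```

This prints an amplitude of 3.16e-05 (about 1/31,600 of the reference) and a margin of 4 dB below the noise floor of the recording side.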

I am not going to reiterate my subjective listening test approach, as I covered it in my FLAC vs WAV post.

In conclusion, using my ears and measurement software on my system, I cannot hear or (significantly) measure any difference between JRiver and JPLAY mini (in Hibernate mode).

April 2, 2013: Updated testing of JRiver vs JPLAY, including JPLAY ASIO drivers for JRiver and Foobar, plus a comparison of the Beach and River JPLAY engines. Results = bit-perfect.

June 13, 2013: Archimago's Musings: MEASUREMENTS: Part II: Bit-Perfect Audiophile Music Players - JPLAY (Windows). "Bottom line: With a reasonably standard set-up as described, using a current-generation (2013) asynchronous USB DAC, there appears to be no benefit with the use of JPLAY over any of the standard bit-perfect Windows players tested previously in terms of measured sonic output. Nor could I say that subjectively I heard a difference through the headphones." Good job Archimago!

Interested in what is audible relative to bit-perfect? Try Fun With Digital Audio - Bit Perfect Audibility Testing. For jitter, try Cranesong's jitter test.

Happy listening!